(This is part one of two posts exploring building read-write Web applications using RDF. Part two will follow, shortly. Update: Part two is now available, also.)

The Web permeates our world today. Far more than static Web sites, the Web has come to be dominated by Web applications--useful software that runs inside a Web browser and on a server. And the latest trend in Web applications, Web 2.0, encourages--among other things--highly interactive Web sites with rich user interfaces featuring content from various sources around the Web integrated within the browser.

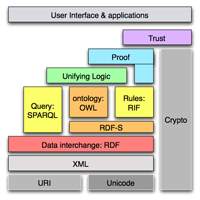

Many of us who have drunk deeply from the Semantic Web Kool-Aid are excited about the potential of RDF, SPARQL, and OWL to provide flexible data modeling, easier data integration, and networked data access and query. It's no coincidence that people often refer to the Semantic Web as a web of data. And so it seems to me that RDF and friends should be well-equipped to make the task of generating new and more powerful Web mash-ups simple, elegant, and enjoyable. Yet while there are a great number of projects using Semantic Web technologies to create Web applications, no end-to-end solution seems to have emerged for creating browser-based read-write applications using RDF which focus on data integration and ease of development.

Following a discussion on this topic at work the other day, I decided to do a brief survey of which approaches already exist for creating RDF-based Web applications. I want to give a brief overview of several options, assess how they fit together, and then outline a vision for some missing pieces that I feel might greatly empower Web developers working with Semantic Web technologies.

First, a bit on what I'm looking for. I want to be able to quickly develop data-driven Web applications that read from and write back to RDF data sources. I'd like to exploit standard protocols and interfaces as much as possible, and limit the amount of domain-specific code that needs to be written. I'd like the infrastructure to make it as easy as possible for the application developer to retrieve data, integrate the data, and work with it in a convenient and familiar format. That is, in the end, I'm probably looking for a system that allows the developer to work with a model of simple, domain-specific JavaScript object hierarchies.

In any case, here's the survey. I've tried to include most of the systems I know of which involve RDF data on the Web, even those which are not necessarily appropriate for creating generalized RDF-based Web apps. I'll follow up with a vision of what could be in my next post.

Semantic Mediawiki is an example of a terrific project which is not what I'm looking for here. It provides wiki markup that captures the knowledge contained within a wiki as RDF which can then be exported or queried. While an installation of Semantic Mediawiki will allow me to read and write RDF data via the Web, I am constrained within the wiki framework; further, the interface to reading and writing the RDF is markup-based rather than programmatic.

The SIMILE project provides an HTTP POST API for publishing and persisting RDF data found on local Web pages to a server-side bank (i.e. storage). They also provide a JavaScript library (BSD license) which wraps this API. While this API supports writing a particular type of RDF data to a store, it does not deal with reading arbitrary RDF from across the Web. The API also seems to require uploaded data to be serialized as RDF/XML before being sent to a Semantic Bank. This does not seem to be what I'm looking for to create RDF-based Web applications.

MIT student David Sheets created a JavaScript RDF/XML parser (W3C license). It is fully compliant with the RDF/XML specification, and as such is a great fit for any Web application which needs to gather and parse arbitrary RDF models expressed in RDF/XML. This parser, the Tabulator RDF parser, populates an RDFStore object. By default, it populates an RDFIndexedFormula store, which inherits from the simpler RDFFormula store. These are rather sophisticated stores which perform (some) bnode and inverse-functional-property smushing and maintain multiple triple indexes keyed on subjects, predicates, and objects.

Clearly, this is an excellent API for developers wishing to work with the full RDF model; naturally, it is the appropriate choice for an application like the Tabulator which at its core is an application that eats, breathes, and dreams RDF data. As such, however, the model is very generic and there is no (obvious, simple) way to translate it into a domain-specific, non-RDF model to drive domain-specific Web applications. Also, the parser and store libraries are read-only: there is no capability to serialize models back to RDF/XML (or any other format) and no capability to store changes back to the source of the data.

(Thanks to Dave Brondsema for an excellent example of using the Tabulator RDF parser which clarified where the existing implementations of the RDFStore interface can be found.)

Jim Ley created perhaps the first JavaScript library for parsing and working with RDF data from JavaScript within a Web browser. Jim's parser (BSD license) handles most RDF/XML serializations and returns a simple JavaScript object which wraps an array of triples and provides methods to find triples by matching subjects, predicates, and objects (any or all of which can be wildcards). Each triple is a simple JavaScript object with the following structure:

    {
      subject: ...,
      predicate: ...,
      object: ...,
      type: ...,
      lang: ...,
      datatype: ...
    }

The type attribute can be either literal or resource, and blank nodes are represented as resources of the form genid:NNNN. This structure is a simple and straightforward representation of the RDF model. It could be relatively easily mapped into an object graph, and from there into a domain-specific object structure. The simplicity of the triple structure makes it a reasonable choice for a potential RDF/JSON serialization. More on this later.
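
As a rough sketch of the mapping into an object graph mentioned above: the helper below groups a flat list of triples, in the shape just described, into one JavaScript object per subject. The function name `triplesToGraph`, the `uri` property, and the sample triples are assumptions made for illustration; none of them comes from Jim Ley's library.

```javascript
// Hypothetical helper (not part of Jim Ley's parser): turns a flat list of
// triples into an object graph keyed by subject URI.
function triplesToGraph(triples) {
  const nodes = Object.create(null);
  const nodeFor = (uri) => nodes[uri] || (nodes[uri] = { uri: uri });

  for (const t of triples) {
    const subject = nodeFor(t.subject);
    // Resources become references to other nodes; literals stay plain strings.
    const value = t.type === "resource" ? nodeFor(t.object) : t.object;
    // Every property holds an array, since an RDF property can repeat.
    (subject[t.predicate] || (subject[t.predicate] = [])).push(value);
  }
  return nodes;
}

// Illustrative data only, modeled on the triple structure described above.
const triples = [
  { subject: "http://example.org/lee", predicate: "http://example.org/address",
    object: "genid:1", type: "resource", lang: "", datatype: "" },
  { subject: "genid:1", predicate: "http://example.org/city",
    object: "Cambridge", type: "literal", lang: "", datatype: "" },
  { subject: "genid:1", predicate: "http://example.org/state",
    object: "MA", type: "literal", lang: "", datatype: "" },
];

const graph = triplesToGraph(triples);
const address = graph["http://example.org/lee"]["http://example.org/address"][0];
console.log(address["http://example.org/state"][0]); // MA
```

The properties are still keyed by full predicate URIs here, so this is only the first half of the journey; getting from this graph to a domain-specific structure would still need some mapping from URIs to friendly names.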

Jim's parser also provides a simple method to serialize the JavaScript RDF model to N-Triples, though that's the closest it comes to providing support for updating source data with a changed RDF graph.
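
To give a feel for what such a serialization involves, here is a minimal sketch that writes triples in the structure above out as N-Triples. It is not the parser's own method: the function names are hypothetical, the `_:b` blank node labels are an arbitrary choice, and only the most common string escapes are handled.

```javascript
// Hypothetical serializer, for illustration only.
function escapeLiteral(text) {
  return text
    .replace(/\\/g, "\\\\")
    .replace(/"/g, '\\"')
    .replace(/\n/g, "\\n")
    .replace(/\r/g, "\\r");
}

// genid:NNNN resources are written as blank nodes, everything else as a URI.
function resourceTerm(value) {
  return value.startsWith("genid:")
    ? "_:b" + value.slice("genid:".length)
    : "<" + value + ">";
}

function toNTriples(triples) {
  return triples.map((t) => {
    let object;
    if (t.type === "resource") {
      object = resourceTerm(t.object);
    } else {
      object = '"' + escapeLiteral(t.object) + '"';
      if (t.lang) object += "@" + t.lang;
      else if (t.datatype) object += "^^<" + t.datatype + ">";
    }
    return resourceTerm(t.subject) + " <" + t.predicate + "> " + object + " .";
  }).join("\n");
}

// Illustrative data only.
const sample = [
  { subject: "http://example.org/lee", predicate: "http://example.org/address",
    object: "genid:1", type: "resource" },
  { subject: "genid:1", predicate: "http://example.org/city",
    object: "Cambridge", type: "literal", lang: "", datatype: "" },
  { subject: "genid:1", predicate: "http://example.org/note",
    object: 'say "hi"', type: "literal", lang: "en", datatype: "" },
];

const ntriples = toNTriples(sample);
console.log(ntriples);
```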

In early 2006, Masahide Kanzaki wrote a JavaScript library for parsing RDF models expressed in Turtle. This parser is licensed under the terms of the GPL 2.0 and can parse into two different formats. One of these formats is a simple list of triples, (intentionally) identical to the object structure generated by Jim Ley's RDF/XML parser. The other format is a JSON representation of the Turtle document itself. This format is appealing because a nested Turtle snippet such as:

    @prefix : <http://example.org/> .
    :lee :address [ :city "Cambridge" ; :state "MA" ] .

translates to this JavaScript object:

    {
      "@prefix": "<http://example.org/>",
      "address": {
        "city": "Cambridge",
        "state": "MA"
      }
    }

While this format loses the URI of the root resource (http://example.org/lee), it provides a nicely nested object structure which could be manipulated easily with JavaScript such as:

    var lee = turtle.parse_to_json(turtleStr);
    var myState = lee.address.state; // this is easy and domain-specific - yay!

Of course, things get more complicated with non-empty namespace prefixes (the properties become names like ex:name which can't be accessed using the obj.prop syntax and instead need to use the obj["ex:name"] syntax). This method of parsing also does not handle Turtle files with more than a single root resource well. And an application that used this method and wanted to get at full URIs (rather than the namespace prefix artifacts of the Turtle syntax) would have to parse and resolve the namespace prefixes itself. Still, this begins to give ideas on how we'd most like to work with our RDF data in the end within our Web app.
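
To illustrate the prefix-resolution chore described above, here is a small sketch that rewrites the keys of such a nested object into full URIs. It assumes the application supplies its own map from prefixes to namespace URIs (with the empty string standing for the default prefix); how the library itself records named prefixes is not covered here, and the function names and the `ex:name` property are hypothetical.

```javascript
// Hypothetical helpers, for illustration only.
function expandName(name, prefixes) {
  const colon = name.indexOf(":");
  const prefix = colon === -1 ? "" : name.slice(0, colon);
  const local = colon === -1 ? name : name.slice(colon + 1);
  const namespace = prefixes[prefix];
  // Names with an unknown prefix (including full URIs) are left untouched.
  return namespace === undefined ? name : namespace + local;
}

function expandKeys(value, prefixes) {
  if (Array.isArray(value)) return value.map((v) => expandKeys(v, prefixes));
  if (value === null || typeof value !== "object") return value;
  const expanded = {};
  for (const [key, inner] of Object.entries(value)) {
    if (key.startsWith("@")) continue; // skip syntax artifacts such as "@prefix"
    expanded[expandName(key, prefixes)] = expandKeys(inner, prefixes);
  }
  return expanded;
}

// Assumed prefix map and sample object; "ex:name" is added for illustration.
const prefixes = { "": "http://example.org/", ex: "http://example.com/ns#" };
const lee = {
  "@prefix": "<http://example.org/>",
  address: { city: "Cambridge", state: "MA" },
  "ex:name": "Lee",
};

console.log(lee["ex:name"]); // bracket syntax is required for prefixed names
const expanded = expandKeys(lee, prefixes);
console.log(expanded["http://example.org/address"]["http://example.org/state"]); // MA
```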

Masahide Kanzaki also provides a companion library which serializes an array of triples back to Turtle. As with Jim Ley's parser, this may be a first step in writing changes to the RDF back to the data's original store; such an approach requires an endpoint which accepts PUT or POSTed RDF data (in either N-Triples or Turtle syntax).

The DAWG published a Working Group Note specifying how the results of a SPARQL SELECT or ASK query can be serialized within JSON. Elias and I have also written a JavaScript library (MIT license) to issue SPARQL queries against a remote server and receive the results as JSON. By default, the JavaScript objects produced from the library match exactly the SPARQL results in JSON specification:

    {
      "head": { "vars": [ "book" , "title" ]
      } ,
      "results": { "distinct": false , "ordered": false ,
        "bindings": [
          {
            "book": { "type": "uri" , "value": "http://example.org/book/book6" } ,
            "title": { "type": "literal" , "value": "Harry Potter and the Half-Blood Prince" }
          } ,
          ...

The library also provides a number of convenience methods which issue SPARQL queries and return the results in less verbose structures: selectValues returns an array of literal values for queries selecting a single variable; selectSingleValue returns a single literal value for queries selecting a single variable which expect to receive a single row; and selectValueArrays returns a hash relating each of the query's variables to an array of values for that variable. I've used these convenience methods in the SPARQL calendar and SPARQL antibodies demos and found them quite easy to work with for SPARQL queries returning small amounts of data.
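
The kind of flattening these methods perform can be sketched against an already-fetched result object. The two functions below are hypothetical stand-ins that only reshape data; they are not the library's own code and do not issue queries. The second result row is made up for illustration.

```javascript
// A results object in the SPARQL JSON results format (second row is illustrative).
const results = {
  head: { vars: ["book", "title"] },
  results: {
    bindings: [
      { book: { type: "uri", value: "http://example.org/book/book6" },
        title: { type: "literal", value: "Harry Potter and the Half-Blood Prince" } },
      { book: { type: "uri", value: "http://example.org/book/book7" },
        title: { type: "literal", value: "An illustrative second title" } },
    ],
  },
};

// Hypothetical helper: the plain values bound to one variable, row by row.
// Rows in which the variable is unbound are skipped.
function valuesOf(results, variable) {
  return results.results.bindings
    .filter((row) => variable in row)
    .map((row) => row[variable].value);
}

// Hypothetical helper: a hash relating each variable to its array of values.
function valueArrays(results) {
  const arrays = {};
  for (const variable of results.head.vars) {
    arrays[variable] = valuesOf(results, variable);
  }
  return arrays;
}

const titles = valuesOf(results, "title");
const byVariable = valueArrays(results);
console.log(titles[0]); // Harry Potter and the Half-Blood Prince
console.log(byVariable.book.length); // 2
```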

Note, however, that this method does not actually work with RDF on the client side. Because it is designed for SELECT (or ASK) queries, the Web application developer ends up working with lists of values in the application (more generally, a table or result set structure). Richard Cyganiak has suggested serializing entire RDF graphs using this method by using the query SELECT ?s ?p ?o WHERE { ?s ?p ?o } and treating the three-column result set as an RDF/JSON serialization. This is a clever idea, but results in a somewhat unwieldy JavaScript object representing a list of triples: if a list of triples is my goal, I'd rather use the Jim Ley simple object format. But in general, I'd rather have my RDF in a form where I can easily traverse the graph's relationships without worrying about subjects, predicates, and objects.
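
For comparison, the two triple-list representations are close enough that converting one into the other takes only a few lines. The sketch below assumes a result set produced by the SELECT ?s ?p ?o query above and reshapes each row into the simple triple object described earlier; the function names and the sample rows are hypothetical.

```javascript
// Hypothetical helper: blank nodes become genid: resources, as in the
// simple triple format; URIs are passed through.
function nodeId(term) {
  return term.type === "bnode" ? "genid:" + term.value : term.value;
}

// Hypothetical helper: one simple triple object per result row.
function bindingsToTriples(results) {
  return results.results.bindings.map((row) => {
    const o = row.o;
    const isLiteral = o.type === "literal" || o.type === "typed-literal";
    return {
      subject: nodeId(row.s),
      predicate: row.p.value,
      object: isLiteral ? o.value : nodeId(o),
      type: isLiteral ? "literal" : "resource",
      lang: o["xml:lang"] || "",
      datatype: o.datatype || "",
    };
  });
}

// Illustrative three-column result set.
const spo = {
  head: { vars: ["s", "p", "o"] },
  results: {
    bindings: [
      { s: { type: "uri", value: "http://example.org/lee" },
        p: { type: "uri", value: "http://example.org/address" },
        o: { type: "bnode", value: "b0" } },
      { s: { type: "bnode", value: "b0" },
        p: { type: "uri", value: "http://example.org/city" },
        o: { type: "literal", value: "Cambridge", "xml:lang": "en" } },
    ],
  },
};

const converted = bindingsToTriples(spo);
console.log(converted[1].subject, converted[1].object); // genid:b0 Cambridge
```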

Additionally, the SPARQL SELECT query approach is a read-only approach. There is no current way to modify values returned from a SPARQL query and send the modified values (along with the query) back to an endpoint to change the underlying RDF graph(s).

Benjamin Nowack implemented the SPARQL JSON results format in ARC (W3C license), and then went a bit further. He proposes three additions/modifications to the standard SPARQL JSON results which result in saved bandwidth, more directly usable structures, and the ability to instruct a SPARQL endpoint to return JavaScript above and beyond the results object itself.

- JSONC: Benjamin suggests an additional jsonc parameter to a SPARQL endpoint; the value of this parameter instructs the server to flatten certain variables in the result set. The result structure contains only the string value of the flattened variables, rather than a full structure containing type, language, and datatype information.

- JSONI: JSONI is another parameter to the SPARQL endpoint which instructs the server to return certain selected variables nested within others. Effectively, this allows certain variables within the result set to be indexed based on the values of other variables. This results in more naturally nested structures which can be more closely aligned with domain-specific models and hence more directly useful by JavaScript application developers.

- JSONP: JSONP is one solution to the problem of cross-domain XMLHttpRequest security restrictions. The jsonp parameter to a SPARQL server would specify the name of a function in which the resulting JSON object is wrapped in the returned value. This allows the SPARQL endpoint to be used via a <script src="..."></script> invocation which avoids the cross-domain limitation.

The first two methods here are similar to what the sparql.js feature provides on the client side for transforming the SPARQL JSON results format. By implementing them on the server, JSONC and JSONI can save significant bandwidth when returning large result sets. However, in most cases bandwidth concerns can be alleviated by sending gzip'ed content, and performing the transforms on the client allows for a much wider range of possible transformations (and no burden on SPARQL endpoints to support various transformations for interoperability). As far as I know, ARC is currently the only SPARQL endpoint that implements JSONC and JSONI.

JSONP is, in some cases, a reasonable solution to the cross-domain XMLHttpRequest problem. I believe that other SPARQL endpoints (Joseki, for instance) implement a similar option via an HTTP parameter named callback. Unfortunately, this method often breaks down with moderate-length SPARQL queries: these queries can generate HTTP query strings which are longer than either the browser (which parses the script element) or the server is willing to handle.
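
A minimal sketch of the client side of this technique follows: it builds the URL that a script element would point at and flags URLs that exceed a length budget. The endpoint URL, the callback name `handleResults`, and the 2000-character limit are all assumptions for illustration; real limits vary between browsers and servers.

```javascript
// Hypothetical helper: builds a JSONP-style request URL for a SPARQL endpoint.
// paramName is "callback" by default; an endpoint may expect "jsonp" instead.
function buildJsonpUrl(endpoint, query, callbackName, options = {}) {
  const paramName = options.paramName || "callback";
  const maxLength = options.maxLength || 2000; // assumed budget, not a standard
  const params = new URLSearchParams();
  params.set("query", query);
  params.set(paramName, callbackName);
  const url = endpoint + "?" + params.toString();
  return { url: url, tooLong: url.length > maxLength };
}

const request = buildJsonpUrl(
  "http://example.org/sparql", // hypothetical endpoint
  "SELECT ?s ?p ?o WHERE { ?s ?p ?o }",
  "handleResults"              // hypothetical global callback function
);
console.log(request.url);
console.log(request.tooLong);

// In a browser, the URL would then be handed to a script element, e.g.:
//   const script = document.createElement("script");
//   script.src = request.url;
//   document.head.appendChild(script);
```

Checking the length before injecting the script element at least lets the application fail loudly, rather than silently, when a query is too long for this approach.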

Queso is the Web application framework component of the IBM Semantic Layered Research Platform. It uses the Atom Publishing Protocol to allow a browser-based Web application to read and write RDF data from a server. RDF data is generated about all Atom entries and collections that are PUT or POSTed to the server using the Atom OWL ontology. In addition, the content of Atom entries can contain RDF as either RDF/XML or as XHTML marked up with RDFa; the Queso server extracts the RDF from this content and makes it available to SPARQL querying and to other (non-Web) applications.

By using the Atom Publishing Protocol, an application working against a Queso server can both read and write RDF data from that Queso server. While Queso does contain JavaScript libraries to parse the Atom XML format into usable JavaScript objects, libraries do not yet exist to extract RDF data from the content of the Atom entries. Nor do libraries exist yet that can take RDF represented in JavaScript (perhaps in the Jim Ley fashion) and serialize it to RDF/XML in the content of an Atom entry. Current work with Queso has focused on rendering RDFa snippets via standard HTML DOM manipulation, but has not yet worked with the actual RDF data itself. In this way, Queso is an interesting application paradigm for working with RDF data on the Web, but it does not yet provide a way to work easily with domain-specific data within a browser-based development environment.

(Before Ben, Elias, and Wing come after me with flaming torches, I should add that Queso is still very much evolving: we hope that the lessons we learn from this survey and discussion about a vision of RDF-based Web apps (in my next post) will help guide us as Queso continues to mature.)

RPC / RESTful API / the traditional approach

I debated whether to put this on here and decided the survey was incomplete without it. This is the paradigm that is probably most widely used and is extremely familiar. A server component interacts with one or more RDF stores and returns domain-specific structures (usually serialized as XML or JSON) to the JavaScript client in response to domain-specific API calls. This is the approach taken by an ActiveRDF application, for instance. There are plenty of examples of this style of Web application paradigm: one which we've been discussing recently is the Boca Admin client, a Web app that Rouben is working on to help administer Boca servers.

This is a straightforward, well-understood approach to creating well-defined, scalable, and service-oriented Web applications. Yet it falls short in my evaluation in this survey because it requires a server and client to agree on a domain-specific model. This means that my client-side code cannot integrate data from multiple endpoints across the Web unless those endpoints also agree on the domain model (or unless I write client code to parse and interpret the models returned by every endpoint I'm interested in). Of course, this method also requires the maintenance of both server-side and client-side application code, two sets of code with often radically different development needs.

This is still often a preferred approach to creating Web applications. But it's not really what I'm thinking of when I contemplate the power of driving Web apps with RDF data, and so I'm not going to discuss it further here.

That's what I've got in my survey right now. I welcome any suggestions for things that I'm missing. In my next post, I'm going to outline a vision of what I see a developer-friendly RDF-based Web application environment looking like. I'll also discuss what pieces are already implemented (mainly using systems discussed in this survey) and which are not yet implemented. There'll also be many open questions raised, I'm sure. (Update: Part two is now available, also.)

(I didn't examine which of these approaches provide support for simple inferencing of the owl:sameAs and rdfs:subPropertyOf flavor, though that would be useful to know.)